Forget incremental upgrades: this is the year AI becomes dramatically cheaper and radically more powerful.

The Unseen Revolution at CES 2026

CES has long been the showcase for tomorrow’s most charismatic technologies, but the true headline in early 2026 was NVIDIA’s formal entry into the AI systems era: a shift from producing discrete chips to delivering fully integrated AI platforms engineered to slash costs, accelerate workloads, and tackle the physical world itself.

At the core of this transformation is Vera Rubin, a smart, scalable, rack‑level AI platform named in honor of the pioneering astronomer whose work reshaped our understanding of dark matter, a poetic nod to the invisible but powerful forces fueling today’s AI expansion.

Why Vera Rubin Matters: Not Just More Power, but Better Economics

Over the past decade, NVIDIA’s GPU evolution, from CUDA pioneers to Blackwell‑class AI accelerators, has been marked by methodical performance gains. Yet as models grow into the hundreds of billions or trillions of parameters, economics, specifically cost per inference, has become the most critical barrier to adoption.

Vera Rubin aims directly at this constraint.

10× Lower Inference Costs

NVIDIA claims the Rubin platform achieves up to ten times lower inference token cost compared with its Blackwell predecessors. That’s not incremental: it reshapes what kinds of AI applications are economically viable, from always‑on assistants to complex, multi‑agent reasoning systems.

In simple terms, one “token” in AI represents a unit of work, roughly a word or image fragment the model must process. Reducing the cost of each token by an order of magnitude drastically cuts operational expenses for large language models (LLMs), multimodal systems, and server‑side AI services.
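To make the order‑of‑magnitude claim concrete, here is a minimal Python sketch of the arithmetic. The baseline price of $2.00 per million tokens and the monthly volume of 5 billion tokens are hypothetical placeholders, not NVIDIA or cloud‑vendor figures; only the "up to 10×" factor comes from the claim above.

```python
def monthly_token_cost(tokens_per_month: int, price_per_million_usd: float) -> float:
    """Return the monthly inference bill in USD for a given token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_usd


# Hypothetical inputs for illustration only (not published pricing).
TOKENS_PER_MONTH = 5_000_000_000
BASELINE_PRICE_USD = 2.00   # assumed USD per million tokens on the older platform
CLAIMED_REDUCTION = 10      # the "up to 10x" figure, taken as a best case

baseline = monthly_token_cost(TOKENS_PER_MONTH, BASELINE_PRICE_USD)
reduced = monthly_token_cost(TOKENS_PER_MONTH, BASELINE_PRICE_USD / CLAIMED_REDUCTION)

print(f"baseline bill: ${baseline:,.0f} per month")
print(f"reduced bill:  ${reduced:,.0f} per month")
```

Because the claim is an upper bound ("up to"), a real deployment would likely land somewhere between the two numbers, depending on model, batch size, and utilization.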

5× Performance Gains and 4× Fewer GPUs

Rubin’s architecture, a “codesigned” stack of six chip types including advanced GPUs and CPUs, is claimed to deliver up to five times the inference performance of Blackwell and to need one quarter as many GPUs for the same workload. The gain reflects system‑level efficiency across the whole stack, not just raw clock speed.
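The two headline ratios are easy to turn into rough capacity planning. The sketch below is only illustrative: the fleet size and per‑fleet throughput are hypothetical numbers, and the 4× and 5× factors are treated as best‑case values from the claims above rather than measured results.

```python
import math


def gpus_needed(baseline_gpus: int, reduction_factor: float) -> int:
    """GPUs required for the same workload, rounded up to whole devices."""
    return math.ceil(baseline_gpus / reduction_factor)


def scaled_throughput(baseline_tokens_per_s: float, speedup: float) -> float:
    """Throughput after applying a claimed speedup factor."""
    return baseline_tokens_per_s * speedup


# Hypothetical baseline fleet, for illustration only.
BASELINE_GPUS = 1_000
BASELINE_TOKENS_PER_S = 20_000.0

print("GPUs for same workload:", gpus_needed(BASELINE_GPUS, 4))
print("tokens/s at 5x speedup:", scaled_throughput(BASELINE_TOKENS_PER_S, 5))
```

Rounding up matters at small scale: a 10‑GPU job divided by four still needs three whole devices, not 2.5.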

With tighter interconnects like NVLink 6 and high‑bandwidth HBM4 memory, the platform delivers 50 petaflops or more of AI compute, a level once achievable only in top‑tier supercomputers.

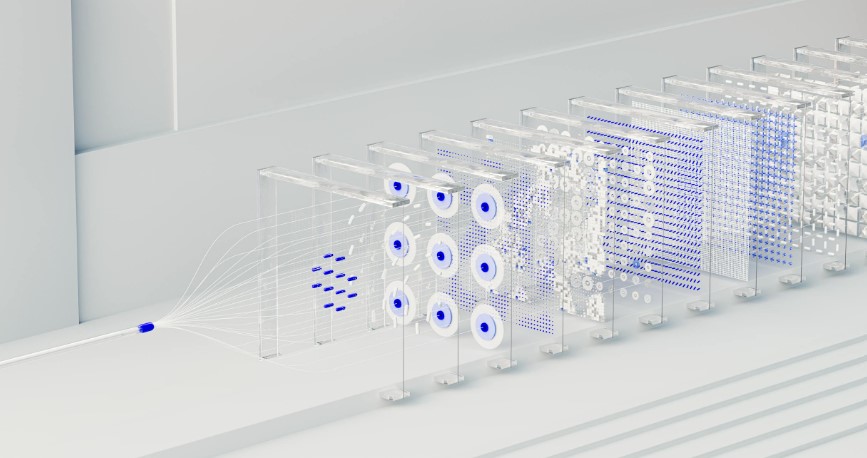

Engine Room, Six Chips, One Integrated Machine

To understand Vera Rubin’s significance, we must look at its foundational design. Instead of a standalone GPU or CPU, NVIDIA has packaged:

- Rubin GPU(s), the core AI compute engine

- Vera CPU, an 88‑core ARM‑based control and data throughput processor

- NVLink 6 Interconnects, ultra‑fast chip communication

- BlueField‑4 DPUs, offload data orchestration and security

- Spectrum‑X Ethernet Switches, power‑efficient network scaling

- ConnectX‑9 SuperNICs, high throughput device networking

This tight integration, which NVIDIA calls extreme codesign, is meant to keep data moving between compute, storage, and networking without bottlenecks. The result is a rack‑scale “AI factory” built for massive, sustained workloads that are difficult to run efficiently on loosely coupled architectures.

The Vera CPU: More Than a Companion Chip

Often overlooked, the CPU in Rubin, dubbed Vera, plays a significant role in data orchestration. With 88 custom Olympus cores designed specifically for AI workloads, it is built to keep data streaming to the Rubin GPUs so they do not sit idle.

Networking and Data Flow: Beyond Chip‑Level Computation

Historically, network and data movement have been the silent bottlenecks in AI training and inference. NVLink 6 provides terabytes‑per‑second‑class bandwidth between GPUs, and ConnectX‑9 extends high‑throughput networking beyond the rack, so that multiple GPUs behave like a unified compute cluster rather than a collection of separate chips.
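A back‑of‑the‑envelope calculation shows why link bandwidth dominates at this scale. The payload size and both link speeds below are hypothetical round numbers chosen for illustration, not specifications of NVLink 6 or ConnectX‑9, and the model ignores latency and protocol overhead.

```python
def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized time to move a payload over a link (no latency or overhead)."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


# Hypothetical values: a 400 GB block of model state moved over two links.
PAYLOAD_GB = 400.0
LINKS = [("slower link, 100 GB/s", 100.0), ("faster link, 2,000 GB/s", 2000.0)]

for label, bandwidth in LINKS:
    print(f"{label}: {transfer_seconds(PAYLOAD_GB, bandwidth):.2f} s")
```

If a transfer like this happens on every step, seconds of idle GPU time per step quickly outweigh any gain in raw compute, which is the sense in which data movement becomes the bottleneck.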

This shift in architecture signals the industry’s recognition that AI isn’t about isolated chips; it’s about orchestrating distributed intelligence at scale.

Intelligent Models Meet Real‑World Machines

Vera Rubin isn’t just about crunching numbers. During the CES keynote, NVIDIA CEO Jensen Huang emphasized “Physical AI”, a term describing AI that transcends pure data and operates within the physical world, in robotics, autonomous vehicles, manufacturing, and more.

Cosmos: The World Foundation for Physical Intelligence

Cosmos represents NVIDIA’s attempt to build AI that understands physical dynamics and environments through large‑scale simulation and real‑world training data. It generates realistic multi‑camera scenarios, synthesizes edge‑case environments, and performs physical reasoning, all building blocks for true embodied intelligence.

For robotics, this means systems that can plan, adapt, and interact at scale, not just recognize patterns but react meaningfully to the unpredictable nuances of physical reality.

Alpamayo: Reasoning AI for the Road

Rubin’s ecosystem extends to vehicles with Alpamayo, a family of reasoning models designed to handle real‑time decisions in autonomous driving. These models don’t just process sensor data; they reason about it, enabling higher levels of autonomy with safety and adaptability.

From robotics to autonomous vehicles, this integration of AI hardware and reasoning models marks a crucial inflection point: intelligence that thinks as well as computes.

Industry and Ecosystem Implications

Cloud Giants Align

Major cloud providers and AI labs, including AWS, Microsoft, Google Cloud, and specialized partners, have already signaled early adoption paths for Rubin platforms. With deployment slated for the second half of 2026, enterprises planning large‑scale AI infrastructure are already recalculating CAPEX and OPEX.

Economic Ripples Across AI Markets

Reducing inference costs by 10× reshapes the total cost of ownership in ways that go far beyond server racks. Smaller startups can now compete on innovation rather than budget. Entire sectors, from fintech to biotech, may explore AI deployments once deemed too expensive. Strategic alliances like the reported NVIDIA–OpenAI infrastructure partnership (targeting 10 gigawatts of deployment power) underscore the economic weight behind Rubin‑class systems.

Stock and Market Interpretation

Investor sentiment around NVIDIA has remained bullish, with analysts citing its dominance in AI infrastructure as a competitive moat. Rubin’s announced production timeline and tight integration reinforce NVIDIA’s position as a foundational pillar for AI’s next decade.

Challenges and the Road Ahead

While heralded as transformative, Rubin also highlights broader industry questions:

Energy and Thermal Management

With massive compute density comes huge energy demand. NVIDIA’s adoption of liquid cooling and novel hot‑water systems illustrates emerging solutions but also underscores how traditional data center designs might be phased out in favor of more advanced thermals.

Software and Orchestration Bottlenecks

Industry discussions suggest that the limiting factor may no longer be chip performance but system orchestration. Complex scheduling, real‑time data management, and parallel reasoning pose new software challenges even for large enterprises.

Conclusion: A New AI Paradigm

NVIDIA’s Vera Rubin platform isn’t just a next‑generation AI chip; it’s a systemic reimagining of how intelligence is produced, deployed, and integrated with the physical world. By slashing inference costs, integrating diverse compute fabrics, and pairing them with real‑world reasoning models, Rubin signals a future where AI isn’t locked in data centers but embedded in machines, environments, and industries across the economy.

For CEOs, CTOs, investors, and innovators alike, understanding Rubin isn’t optional; it’s central to mapping the future of AI.